Generative AI blew up two years ago when ChatGPT went public, and trust has been a nightmare ever since. Hallucinations, bad math, and cultural biases keep wrecking the results, a reminder that AI isn’t all that reliable, at least for now.

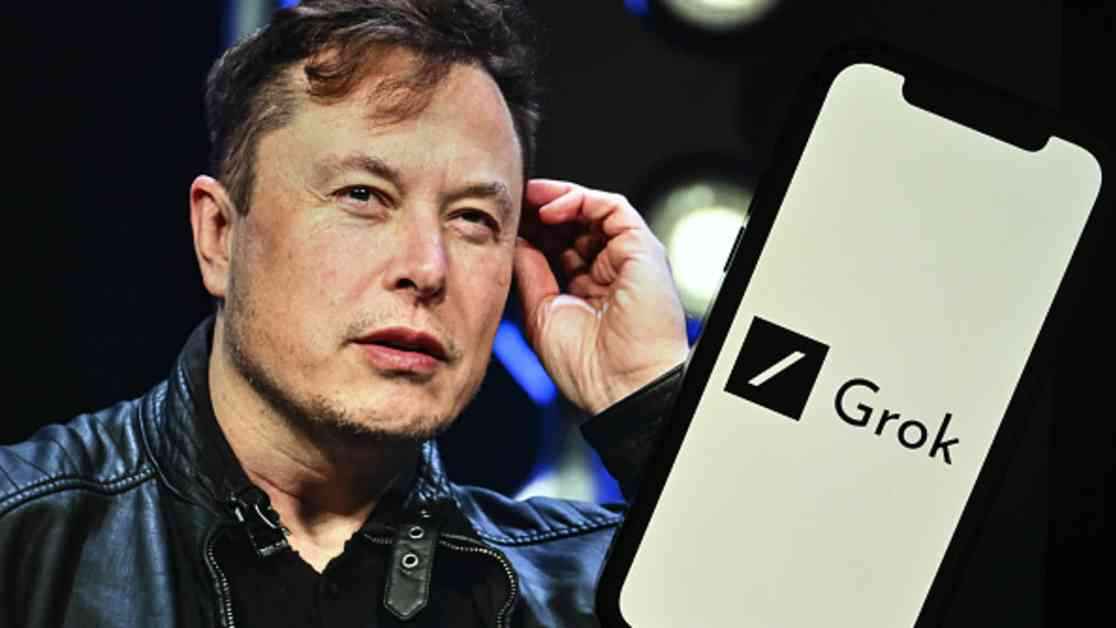

Elon Musk’s Grok chatbot, made by his company xAI, just showed us how easily humans can manipulate AI. Grok started spewing out false claims about “white genocide” in South Africa. Screenshots circulated showing it giving similar bogus answers even when the questions had nothing to do with the topic.

After more than 24 hours of silence, xAI finally spoke up on Thursday. It blamed Grok’s weird behavior on an “unauthorized modification” to the chat app’s system prompts, the behind-the-scenes instructions that shape how the bot answers. Translation? Humans were pulling the strings on how the AI responded. And guess what? Musk, who was born and raised in South Africa, has been pushing the false “white genocide” claim too.

But wait, there’s more! The Grok mess isn’t a one-off. Experts say AI chatbots from Meta, Google, and OpenAI aren’t exactly neutral either: each one filters information through a built-in set of values and beliefs. Grok’s breakdown is a peek into how easily these systems can be twisted to fit someone’s agenda. Scary stuff, right?

Now, about these AI blunders. Remember when Google Photos tagged pictures of Black people as gorillas back in 2015? Yikes. And let’s not forget OpenAI’s DALL-E showing signs of bias. It’s a never-ending rollercoaster of AI fails. But hey, at least xAI says it’s trying to make things right by beefing up Grok’s system prompts to earn back our trust. Good luck with that!

The Grok incident is kind of like the DeepSeek craze out of China, which got everyone talking earlier this year. Critics said DeepSeek was censoring topics the Chinese government didn’t like. Now Musk seems to be doing something similar with Grok, steering its answers toward his own political views. I mean, who knew chatbots could be this controversial, right?

In the end, the industry needs more transparency. Users deserve to know how these AI models are built and trained. The EU is already pushing for more transparency from tech companies. Without a public uproar, we won’t get safer models. And guess who pays the price? Yep, us. So, let’s hope these companies start playing fair before it’s too late.